Apple may update Siri as it struggles with ChatGPT

The hype machine is real when it comes to generative AI and ChatGPT, which seem to be everywhere in tech these days. So it’s not surprising that we’re starting to hear chatter about a new, improved Siri. In fact, 9to5Mac has already spotted a new natural language system.

Do you speak my language?

The claim is that Siri in the tvOS 16.4 beta includes a new “Siri Natural Language Generation” framework, codenamed “Bobcat.” As described, it doesn’t sound impressive: it mainly seems focused on telling (dad?) jokes, though it might also let you use natural language to set timers.

These whispers follow a recent New York Times report on Apple’s February AI summit. That report claimed the event put some focus on the kind of generative content and large language models (LLMs) used by ChatGPT. It also said Apple’s engineers are “actively testing” language-generating concepts every week as the company seeks to move its AI forward.

So, is it building a ChatGPT competitor? Not really, according to Bloomberg.

“Hey Siri, how do you spell ‘catch-up’?”

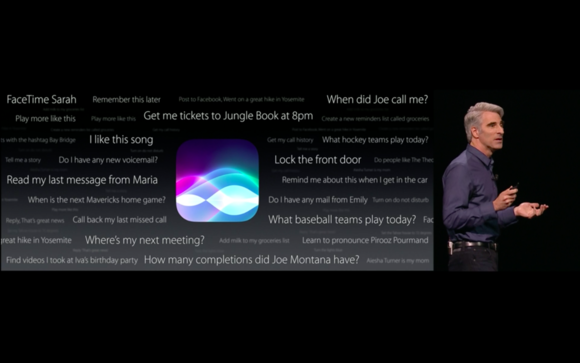

While Siri seemed incredibly sophisticated when it first appeared, its development hasn’t kept pace, giving Apple’s cheeky voice assistant echoes of MobileMe and Ping. Like both of those Apple failures, Siri showed promise it never quite lived up to, and it now lags behind assistants from Google and Amazon, despite being a little more private.

Siri’s lack of contextual sense means it’s really only good at what it’s trained to do, which limits its abilities; GPT seems to leave it in the dust. With the recent GPT-4 update, OpenAI is clearly innovating fast, and we can already see that this has lit a fire under the big tech firms. Microsoft has adopted ChatGPT inside Bing, Google is pressing fast-forward on PaLM development, and Amazon is pushing hard on AWS Chat (the latter now integrated within Microsoft Teams).

Apple — and Siri — seem out on a limb.

Not the one and only

Of course, Siri is not the only machine intelligence (MI) Apple works on. In some domains, such as accessibility and image augmentation, it has achieved insanely good examples of MI done right. But, somehow, Siri still makes mistakes.

I’m not entirely sure how Apple’s Steve Jobs would have handled that; I can’t see him being happy when his HomePod tells him it can’t find his Dylan tracks. The difference between the two AIs is that I could ask GPT to create a picture of him throwing that smart speaker at the wall.

In part, this is because of how Siri is built.

How they made Siri

Siri is essentially a huge database of answers covering different knowledge domains, supplemented by search results sourced from Spotlight, plus natural language interpretation so you can speak to it. When a request is made, Siri checks that it understands the question and then uses deep/machine learning algorithms to identify the appropriate response. To pick that response, it makes a numerical assessment (a confidence score) of the likelihood that it has the right answer.

What this means is that when you ask Siri a question, it first has a quick look to see if this is a simple request (“switch on the lights”) it can fulfill swiftly from what it already knows, or if it needs to consult the larger database. Then it does what you ask it to do (sometimes), gets you the data you need (often) or tells you it doesn’t understand you or asks you to change a setting hidden somewhere on your system (too often).
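To make that flow concrete, here is a heavily simplified Python sketch of confidence-score routing: a fast path for simple commands, a lookup in a larger knowledge store, and a fallback reply when no candidate answer scores highly enough. It is not Apple’s implementation, which is not public; the command table, the 0.6 threshold and the `lookup_knowledge` and `handle_request` functions are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold: answers scoring below this are treated as "not understood".
CONFIDENCE_THRESHOLD = 0.6


@dataclass
class Candidate:
    answer: str
    confidence: float  # estimated likelihood (0.0 to 1.0) that the answer is right


# Hypothetical fast path: simple requests fulfilled from what the assistant already knows.
SIMPLE_COMMANDS = {
    "switch on the lights": "Turning on the lights.",
    "set a timer": "Timer set.",
}


def lookup_knowledge(query: str) -> list[Candidate]:
    """Hypothetical stand-in for the larger database / search lookup."""
    knowledge = {
        "capital of portugal": [Candidate("Lisbon is the capital of Portugal.", 0.95)],
        "weather": [
            Candidate("Here is today's forecast.", 0.7),
            Candidate("Here is the weather app.", 0.4),
        ],
    }
    results: list[Candidate] = []
    for key, candidates in knowledge.items():
        if key in query:
            results.extend(candidates)
    return results


def handle_request(query: str) -> str:
    q = query.lower().strip()
    # 1. Quick check: is this a simple request we can fulfil immediately?
    if q in SIMPLE_COMMANDS:
        return SIMPLE_COMMANDS[q]
    # 2. Otherwise consult the larger knowledge store and score the candidates.
    candidates = lookup_knowledge(q)
    best = max(candidates, key=lambda c: c.confidence, default=None)
    # 3. Only answer if the best confidence score clears the threshold.
    if best is None or best.confidence < CONFIDENCE_THRESHOLD:
        return "Sorry, I didn't understand that."
    return best.answer


print(handle_request("What is the capital of Portugal?"))
print(handle_request("Play my Dylan tracks"))
```

In a design like this, everything the assistant can say has to exist in its tables ahead of time, and raising the threshold trades wrong answers for more “I didn’t understand” replies.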

In theory, then, Siri is only as good as its database: the more answers it holds, the better and more effective it becomes.

However, there’s a problem. As explained by former Apple engineer John Burkey, the way Siri is built means engineers must rebuild the entire database to upgrade it. That’s a process that can take up to six weeks.

This lack of real learning makes Siri and other voice assistants “dumb as a rock,” according to Microsoft CEO Satya Nadella. You’d expect him to say something like that, of course, as Microsoft has invested billions in OpenAI, the maker of ChatGPT, and is weaving its technology into Microsoft products.

Generative AI, on the other hand

Generative AI (the kind of intelligence used in ChatGPT, Midjourney, DALL-E and Stable Diffusion) also uses natural language, its own databases and search results, but it can additionally use algorithms to create original-seeming content such as audio, images or text.

You can ask it a question, and it will draw on the data available to it, making a series of decisions to produce a result.
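Real generative models such as GPT are large neural networks trained on enormous amounts of text, but the basic idea of building a result one small decision at a time can be illustrated with a toy bigram model, which picks each next word from the words that followed the current one in its training text. This is a deliberately crude analogy, not a description of ChatGPT’s internals; the corpus and the `build_bigrams` and `generate` functions are hypothetical.

```python
import random

# Hypothetical toy corpus; real models learn from vastly more text.
CORPUS = "the bar in lisbon is lovely . the city is lovely at night . the bar is busy ."


def build_bigrams(text: str) -> dict[str, list[str]]:
    """Map each word to the list of words observed to follow it."""
    words = text.split()
    table: dict[str, list[str]] = {}
    for current, nxt in zip(words, words[1:]):
        table.setdefault(current, []).append(nxt)
    return table


def generate(table: dict[str, list[str]], start: str, max_words: int, seed: int) -> str:
    """Repeatedly sample a next word; each sample is one small 'decision'."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_words - 1):
        followers = table.get(output[-1])
        if not followers:
            break  # no known continuation, so stop
        # Duplicates in the list make frequent continuations proportionally more likely.
        output.append(rng.choice(followers))
    return " ".join(output)


table = build_bigrams(CORPUS)
print(generate(table, "the", max_words=8, seed=1))
```

Because every word is sampled rather than looked up, the output can be a sentence that never appeared in the corpus, which is why such systems can sound convincing without being right.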

Now, as has been noted quite frequently since people began exploring the tech, those results aren’t always great or original, but they do usually seem convincing. The capacity to ask it to generate deepfake videos and photos takes this even further.

In use, one way to see the difference between the two AI models is to think about what they can achieve.

So, while with Siri you may be able to ask for a map of Lisbon, Portugal, or even get directions to somewhere on that map, generative AI lets you ask more nuanced questions, such as which parts of the city it recommends. You can also ask it to write a story set in that city, or even to create a spookily accurate fake photo of you sitting in that really lovely bar in Largo dos Trigueiros.

It’s pretty clear which AI is more impressive.

What happens next?

It doesn’t need to be this way. Developers have managed to create apps to add ChatGPT to Apple’s products. watchGPT, which was recently renamed Petey – AI Assistant for trademark reasons, is a great example.

Apple is unlikely to want to cede such a competitively important technology to third parties, so it’s likely to continue working toward its own solution, but this could take years — during which Siri may still fail to open that cabin door.

However, given that GPT-4 costs up to 12 cents per thousand tokens (the small chunks of text it reads and writes), Apple is highly unlikely to weave it directly into its operating systems. With an installed base of more than a billion users, doing so would be hugely expensive, and Microsoft is already there.
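A quick back-of-envelope calculation shows why scale matters. OpenAI bills GPT-4 by the token, and the sketch below multiplies out the 12-cent upper rate per thousand tokens; the usage figures (one query per user per day, 500 tokens per query) are purely hypothetical assumptions, and real usage and pricing would differ.

```python
# Back-of-envelope cost estimate. Usage figures are hypothetical assumptions;
# only the $0.12 per 1,000 tokens upper rate comes from the article.
PRICE_PER_1K_TOKENS = 0.12     # US dollars, GPT-4 upper rate
USERS = 1_000_000_000          # "more than a billion users", rounded down
QUERIES_PER_USER_PER_DAY = 1   # hypothetical
TOKENS_PER_QUERY = 500         # hypothetical, prompt plus response


def daily_cost(users: int, queries_per_user: float,
               tokens_per_query: int, price_per_1k: float) -> float:
    """Total tokens per day, converted to dollars at the per-1,000-token rate."""
    total_tokens = users * queries_per_user * tokens_per_query
    return total_tokens / 1000 * price_per_1k


cost = daily_cost(USERS, QUERIES_PER_USER_PER_DAY, TOKENS_PER_QUERY, PRICE_PER_1K_TOKENS)
print(f"${cost:,.0f} per day, ${cost * 365:,.0f} per year")
```

With these assumed inputs the estimate comes to about $60 million a day, before any volume discount.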

In that context, Apple might simply bite the bullet and make it easy for its developers to add support for OpenAI’s tech in the apps they make, effectively passing the cost on to them and their customers.

That might help in the short term, but I’m convinced this will light a fire in the belly of Apple’s machine intelligence teams. They will be twice as determined now to push innovation further in the natural language processing that is core to both technologies.

But at this stage, in terms of implementation, they do appear to have fallen behind. Then again, as GPT-generated images show, appearances can be deceptive.

Please follow me on Mastodon, or join me in the AppleHolic’s bar & grill and Apple Discussions groups on MeWe.

Copyright © 2023 IDG Communications, Inc.
